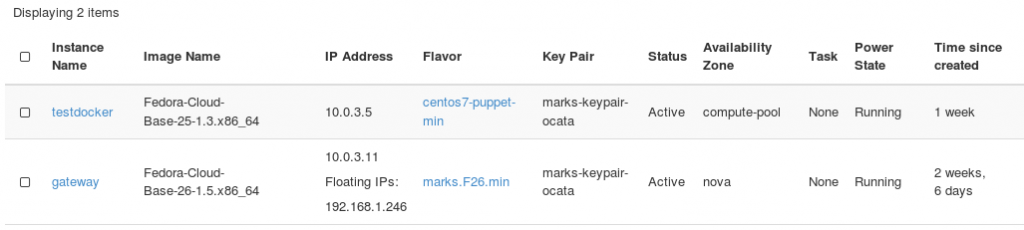

I covered in an earlier post how to set up ssh as a proxy on a gateway server so that instances could be reached through it. That was not ideal. It always connected to the instances using my userid, regardless of which external user had connected via the proxy, and I had to remember to start the proxy process on the gateway server as well.

So I have resorted to extreme laziness. On each of my desktop servers that is likely to need to log on to the instances, I have added a static route to my OpenStack internal network range via the floating IP assigned to the gateway instance.

```
[root@phoenix bin]# route add -net 10.0.3.0/24 gw 192.168.1.246
[root@phoenix .ssh]# ssh -i ./marks-keypair-ocata.pem fedora@10.0.3.5
X11 forwarding request failed on channel 0
[fedora@testdocker ~]$ exit
logout
Connection to 10.0.3.5 closed.
```
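The `route` command above uses the older net-tools syntax, and a route added that way lasts only until the next reboot or network restart, which is why it ends up in a script. A minimal sketch of such a route-add script, using the iproute2 `ip` command instead, might look like the following. The function name `add_openstack_route`, the variables `OS_INTERNAL_NET` and `GATEWAY_FIP`, and the `DRY_RUN` switch are all hypothetical. The default addresses are simply the ones from the session above, and the script assumes it is run as root.

```sh
#!/bin/sh
# Hypothetical route-add helper: route the OpenStack internal network via the
# gateway instance's floating IP. Assumes iproute2 ("ip") is installed and the
# caller is root. Defaults are the addresses from the session above; change
# GATEWAY_FIP whenever the gateway instance is rebuilt with a new floating IP.
OS_INTERNAL_NET="${OS_INTERNAL_NET:-10.0.3.0/24}"
GATEWAY_FIP="${GATEWAY_FIP:-192.168.1.246}"

add_openstack_route() {
    local net="${1:-$OS_INTERNAL_NET}"
    local gw="${2:-$GATEWAY_FIP}"
    if [ "${DRY_RUN:-0}" = "1" ]; then
        # Only print the command that would be run.
        echo "ip route replace $net via $gw"
    else
        # "replace" adds the route, or updates an existing route for the same
        # prefix, so re-running does not fail with "File exists".
        ip route replace "$net" via "$gw"
    fi
}
```

Calling `add_openstack_route` with no arguments adds or updates the route, and `DRY_RUN=1 add_openstack_route` just prints the `ip` command it would run. Using `ip route replace` rather than `ip route add` means that after a rebuild only `GATEWAY_FIP` needs changing, and running the script again simply repoints the existing route.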

Of course, every time I delete or rebuild the gateway server I will have to update the scripts on my desktops that add the route. But quite honestly I got sick of having to log on to the gateway server in order to log on to internal instances, which also required my ssh key to be copied to the gateway server each time it was rebuilt. Updating the route-add scripts is easier.
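Because the route hard-codes the gateway's floating IP, a desktop whose script has not been updated after a rebuild will keep sending traffic for the internal network to the old address. A small check along these lines can confirm which next hop the route currently uses. It is only a sketch assuming iproute2 is installed; `route_next_hop` and `route_is_current` are hypothetical helper names, and the parsing simply takes the word after `via` in the `ip route show` output.

```sh
#!/bin/sh
# Hypothetical helper: read route lines (e.g. from "ip route show 10.0.3.0/24")
# on stdin and print the address following the first "via", if any.
route_next_hop() {
    awk '{ for (i = 1; i < NF; i++) if ($i == "via") { print $(i + 1); exit } }'
}

# Hypothetical check: succeed only if the route for NET exists and goes via
# EXPECTED_GW. Assumes iproute2 ("ip") is installed.
route_is_current() {
    local net="$1" expected_gw="$2" actual
    actual="$(ip route show "$net" | route_next_hop)"
    [ -n "$actual" ] && [ "$actual" = "$expected_gw" ]
}
```

For example, `route_is_current 10.0.3.0/24 192.168.1.246 || echo "route to 10.0.3.0/24 is stale"` prints a warning when the route is missing or points somewhere else.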

I now distribute the ssh key to my desktops via Puppet, along with the script that adds the route, so on the many occasions I rebuild the gateway server I only have to make the update in one place. Extreme laziness.